- Fall 2019

- Volume 1

- Issue 1

As Large As Life: Using Artificial Intelligence in Cancer Care

Key Takeaways

- IBM's Watson struggled with cancer treatment recommendations, revealing AI's limitations in complex medical applications.

- AI systems like Google's LYNA and ScreenPoint's Transpara have shown high accuracy in detecting cancer from medical images.

Using artificial intelligence to analyze huge stores of information known as big data boosts the effectiveness of cancer diagnosis and treatment.

In 2014, three years after the artificial intelligence system that IBM calls Watson thrashed two humans in a game of “Jeopardy!”, the company began selling Watson’s services as a virtual oncologist, an intelligent machine that would look at patient records and make treatment recommendations.

Some predicted that Watson would clobber oncologists for much the same reason it bested game show contestants: It could retain more data than any person. Before recommending treatment, it could “remember” every word from every cancer study ever published and consider every finding that applied to a particular patient.

In reality, according to a 2017 investigation published by the news website Stat, Watson struggled “with the basic step of learning about different kinds of cancers” and disappointed many of the hospitals and practices that purchased its services, sometimes by recommending “unsafe and incorrect” cancer treatments.

Watson is likely the best-known example of artificial intelligence, or AI, scanning big data to achieve “deep learning” in complex subjects, meaning a computerized system that analyzes enormous stores of information and recognizes patterns in the data on its own. The struggles of the IBM system illustrate a tendency to overestimate new technologies. Yet lesser-known exercises in big data analysis have improved the care that many patients with cancer receive, and a string of promising study results and new business ventures suggest that AI could soon play a significant role in the two most vital parts of cancer care: finding the cancer and determining the treatment.

“AI is never going to fully replace physicians, but researchers have made impressive strides in developing AI that specializes in particular tasks, like reading images from CT scans or pathology slides,” says Ryan Schoenfeld, vice president of scientific research at the Mark Foundation for Cancer Research, a New York City-based philanthropy. “I expect we will continue to see further advances in this area.”

BIGGER DATA, BETTER DIAGNOSES

The Food and Drug Administration (FDA) has already cleared at least one product that uses AI to analyze mammography. The Transpara system, from a Dutch company called ScreenPoint Medical, has performed well in a wide variety of tests. The biggest challenge pitted Transpara against 101 radiologists in the analysis of 2,652 digital mammograms and found that the AI matched the average performance of the humans.

Such results suggest that ScreenPoint’s AI, acting alone, would already do a better job reading mammograms than about half of all radiologists, but the FDA has cleared the system to help these doctors, not to replace them.

The South Korean government has approved a similar mammogram-analysis system from Lunit. The company also sells Lunit Insight CXR, an AI service that can search for lung cancer in chest X-rays and is used by hospitals in Mexico, Dubai and South Korea.

Another AI system that helps radiologists detect lung cancer in CT scans comes from an Amsterdam-based company called Aidence. That system, dubbed Veye Chest, is approved for use in the EU and is already deployed at 10 hospitals in the Netherlands, the U.K. and Sweden. Here in the U.S., a number of organizations are developing cancer-spotting AI systems, many of which have also performed well in trials.

Earlier this year, researchers from Google compared their AI against six radiologists in detecting lung cancer from CT scans. The model, which had learned to spot lung cancer by examining 45,856 CT scans from the National Cancer Institute (NCI) and Northwestern University, matched the doctors in cases where both person and machine examined current and older CT scans of the same patient. When no historical images were available and diagnosis hinged entirely on images taken on a single day, the AI beat the physicians, producing 11% fewer false positives and 5% fewer false negatives.

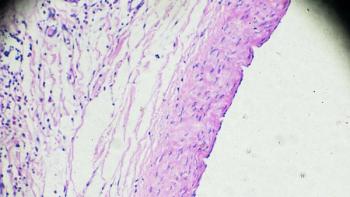

That success came less than a year after Google completed two tests of an AI system designed to spot evidence of breast cancer metastases in images of biopsied lymph nodes. Finding such metastases is a notoriously difficult task for humans. Previous research found that the detection rate for small metastases on individual slides can be as low as 38%. Google’s tool, Lymph Node Assistant (LYNA), fared considerably better. In the first test, LYNA was able to distinguish a slide with cancer from a cancer-free slide 99% of the time. It was also able to accurately determine the location of the cancers, even those that were too small to be consistently identified by pathologists. In the second study, Google compared how pathologists performed with and without help from LYNA and found that artificial assistance not only improved accuracy but also halved the amount of time needed to review each slide.

Google isn’t the only large organization that’s developing or testing such technology. Many of the nation’s major cancer centers are working with universities, high-tech companies in Silicon Valley or both to develop AI that can help radiologists and pathologists spot tumors faster and more reliably. They even hold competitions to encourage boldness and innovation.

“Machine learning is a very powerful tool, but it requires a huge amount of data,” says Tony Kerlavage, who runs one of the nation’s largest repositories for cancer data, the NCI’s Center for Biomedical Informatics and Information Technology. “Researchers have struggled in the past to find enough data, all in the same place, all in the same format, and that has hindered progress. Our goal is to make truly massive amounts of quality data accessible to everyone.”

Such efforts, if successful, would be great news for patients, because there’s plenty of room for improvement in analyzing pathology slides, mammograms and CT scans. Current mammography practices miss about 20% of all breast cancers (false-negative results) and frequently identify healthy tissue as cancerous (false-positive results): About half of women who get annual mammograms for 10 years will receive at least one false positive. Lung cancer screening, which is typically done via low-dose computed tomography scans, can produce far more false positives than true positives. In a study of 2,106 Veterans Affairs patients who underwent recommended lung cancer screening, radiologists identified nodules that required follow-up in 1,184 patients, but just 31 had lung cancer.
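To see how lopsided those screening numbers are, the short Python calculation below works out the positive predictive value (the share of flagged patients who actually had cancer) from the Veterans Affairs figures. It assumes, as the text implies, that all 31 cancers were among the 1,184 flagged patients; the function name `screening_summary` is illustrative only.

```python
def screening_summary(screened: int, flagged: int, cancers: int) -> dict:
    """Summarize a screening round, assuming every cancer was among the flagged patients."""
    false_positives = flagged - cancers
    return {
        "flag_rate": flagged / screened,         # share of screened patients sent for follow-up
        "false_positives": false_positives,      # flagged patients without lung cancer
        "ppv": cancers / flagged,                # positive predictive value
        "fp_per_tp": false_positives / cancers,  # false alarms per cancer found
    }


# Figures from the Veterans Affairs study cited in the text.
summary = screening_summary(screened=2106, flagged=1184, cancers=31)
print(f"Flagged for follow-up: {summary['flag_rate']:.1%}")
print(f"False positives: {summary['false_positives']}")
print(f"Positive predictive value: {summary['ppv']:.1%}")
print(f"False positives per cancer found: {summary['fp_per_tp']:.0f}")
```

Under that assumption, about 56% of screened patients were sent for follow-up, 1,153 of them did not have lung cancer, and only about 2.6% of flagged patients (roughly 1 in 38) turned out to have the disease.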

SELECTING TREATMENTS

The term “big data” was coined in the 1990s to describe a deluge of information made newly available by two major changes. First, a wide range of things that had once been recorded exclusively on paper, film or magnetic tape — along with a variety of information that had never been stored — started being saved on computers. Second, those computers, which had once been as isolated from the outside world as file cabinets, were getting hooked up to company intranets and the internet.

Big data is so big that the numbers are hard to fathom. Former Google CEO Eric Schmidt estimated that by 2010, people were producing as much searchable information every two days as mankind had collectively produced from the dawn of civilization until 2003. That total includes social media posts, such as tweets and instant messages, that may not contribute to scientific progress, but the data with scientific meaning has proved very valuable.

Some of the most important information produced early in the big data era came from the Human Genome Project (HGP) and the private-sector equivalent undertaken by Celera Genomics. The HGP took 13 years and $3 billion to sequence the 3.3 billion DNA base pairs in a complete genome, and the dividends were huge. Before the HGP, researchers had found genetic causes or risk factors for about 60 conditions. A decade after its completion, that figure had risen to about 5,000, and the number of drugs whose use was at least partly governed by genetic testing exceeded 100.

These advances have been particularly helpful for patients with cancer. The FDA has approved more than 50 genetically targeted cancer therapies, and researchers are working on countless others. Oncologists should already be ordering genetic tests on many if not most tumor samples and using results to guide treatment. Genetic tests and targeted medications constitute a very real benefit for patients, but they don’t represent the truly revolutionary leap that optimists promised at the beginning of the big data age.

The hope then was that medical practices would quickly transition to electronic health record (EHR) systems that would facilitate significant breakthroughs by letting researchers analyze many factors associated with real-world medical outcomes. The reality proved disappointing, and not just because doctors resisted the move to time-consuming EHRs the way most kids resist eating vegetables.

Data analysis has traditionally required what is called structured data — i.e., data entered in exactly the right box in exactly the right way. Spreadsheets and databases could not analyze handwritten notes, digital images, PDFs of test results or any of the types of unstructured data that EHRs stored.

The only way to make some of that information useful was to pay people to read unstructured text, convert it into the proper terms and enter it into the proper fields. Fortunately, software that uses natural language processing (think Amazon’s Alexa) is starting to make things easier, but only a little. Meanwhile, the digitization of radiology and pathology results has greatly facilitated the development of AI strategies.

“We are now using AI to help with data collection, but the technology isn’t to the point where you click a button and you get all the information reliably extracted from source material and entered properly in our database. We’re not even at that point with plain but unstructured text, let alone PDFs or images, but we are getting closer,” says Dr. Robert S. Miller, medical director of CancerLinQ, a nonprofit subsidiary of the American Society of Clinical Oncology (ASCO) that collects data from participating members.

AN INNOVATIVE APPROACH

CancerLinQ is primarily designed as a quality improvement tool. It allows practices to compare how they stack up against each other, identify where they fall short of their peers or fail to follow existing standards of care, and make improvements. This is an unusual strategy for using big data to improve cancer care, and Miller says it has helped participating practices identify areas where they can make significant improvements.

About 100 of the nation’s more than 2,200 hematology/oncology practices have signed up for CancerLinQ, and about half of those are actively participating. Dozens of additional practices have expressed interest in the program, and Miller expects participation to increase sharply now that the information that practices submit can also be used, with no further effort, to qualify for ASCO’s Quality Oncology Practice Initiative certification program.

In addition to helping practices improve their operations, CancerLinQ has begun supplying researchers with data they can analyze, and researchers were quick to take advantage. ASCO’s last annual meeting saw the presentation of five studies that mined CancerLinQ data.

One study investigated outcomes among patients with lung cancer who received checkpoint inhibitors despite a history of autoimmune disease. The findings revealed that these patients fared about as well as similarly treated patients with lung cancer but no autoimmune disease. It was a potentially important finding because patients with autoimmune disease were excluded from most checkpoint inhibitor trials, so there was little published information about how they respond to these medications.

“We are looking to expand the data that CancerLinQ can track to include more genetic information about both tumor and patient. That will be a challenge because many practices store that data in their EHR as PDFs or even badly photocopied faxes, but we are investigating ways to get structured data from the sequencing labs themselves or from those EHRs that do get data directly from the lab,” Miller says. “As we bring in more molecular data, the chance of seeing unexpected associations with important implications will increase.”

RETHINKING RECOMMENDATIONS

One of the most important implications that a study can uncover is that treatment guidelines called standards of care should be changed for a particular patient population.

Expert committees of organizations such as ASCO and the National Comprehensive Cancer Network periodically review all the current research on each tumor type and tell doctors how to treat every subtype at every stage in the tumor’s progression. Some guidelines strongly recommend a single treatment that works well for nearly every patient, such as the complete surgical removal of a cancer that has yet to spread. In many other cases, guidelines give equal recommendation to multiple treatments, each of which will work in some patients but not in others. Doctors and patients must discuss each option, make a choice and hope they don’t waste valuable time on something that is ineffective.

That risk is particularly relevant when using immunotherapies known as checkpoint inhibitors. These medications often get the highest recommendations because when they work, they can work far better than alternative medications. For most eligible patients, however, they produce no response.

Many research teams hope to discover the differences between responders and non-responders by analyzing mountains of data, either by running the analyses themselves or teaching software to look for them. In one of the most promising efforts reported thus far, researchers mined data from a bladder cancer trial that collected an unusual amount of information about participants’ demographics, medical history, tumor biology and immune cells.

The researchers gave their AI 36 points of information on every patient and asked it to devise an algorithm that would separate patients who responded from those who did not. The software eventually found 20 features that, when considered collectively and weighted appropriately, explained 79% of the response variation. Had that software’s algorithm been used to include and exclude trial patients, it would have included all patients who responded to treatment but just 38% of non-responders.
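What that selection result would mean for a trial can be made concrete with a little arithmetic. The Python sketch below applies the reported inclusion rates (all responders and 38% of non-responders) to a baseline response rate that is purely hypothetical: 20% is chosen for illustration and is not a figure reported by the study. The function name `responder_share` is likewise hypothetical.

```python
def responder_share(base_rate: float,
                    responder_inclusion: float = 1.0,
                    nonresponder_inclusion: float = 0.38) -> float:
    """Share of admitted patients who respond, given how often each group is admitted.

    Defaults mirror the reported result: the rule would have admitted all
    responders (1.0) and 38% of non-responders (0.38).
    """
    admitted_responders = base_rate * responder_inclusion
    admitted_nonresponders = (1 - base_rate) * nonresponder_inclusion
    return admitted_responders / (admitted_responders + admitted_nonresponders)


# Hypothetical baseline: suppose 20% of otherwise-eligible patients respond.
print(f"{responder_share(0.20):.1%}")
```

Under that assumption, about 40% of admitted patients would respond, nearly double the hypothetical 20% baseline. The calculation only shows what the reported percentages imply for one imagined group of patients; it does not show how the algorithm would perform elsewhere.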

Such results, the study authors note, do not prove that the same algorithm would predict immunotherapy response just as accurately for all patients with cancer or even those with bladder cancer who resemble the study participants, but the findings do suggest that the key to predicting response lies in analyzing far more than the two or three factors that currently qualify patients for immunotherapy.

Another effort to increase response rates by using more data points to distinguish patients and personalize treatment is underway at Cambridge University in the United Kingdom. The team, which received an 8.6 million-pound grant from the Mark Foundation, wants to predict each cancer’s progression and which treatments will work best by integrating information from imaging, biopsies, blood tests and genetic tests.

“Cancer patients already undergo a number of diagnostic tests that collectively provide considerable information, but treatment decisions are usually guided by specific results on one test — like a HER2 mutation — or a general feel for the combined picture. There’s no way that doctors can currently make a decision based upon the exact combination of relevant factors on all those diagnostic tests. We plan to change that,” says Richard Gilbertson, director of the Cancer Research UK Cambridge Centre. “We have a huge amount of patient data here at Cambridge because we have a long history of clinical trials that collect thorough patient data. We also have some very talented mathematicians and computer scientists who can create the algorithms needed to separate signal from noise.”

The Cambridge team may not be testing its individualized treatment recommendations in live patients quite yet, but a team at Columbia University’s Califano Systems Biology Lab is doing just that with its N of 1 trial program.

In a typical trial of a drug, researchers enroll similar patients and give them all the same treatment. Columbia’s project starts with a variety of patients who have exhausted all their treatment options; finds the functional drivers, or “master regulators,” of each patient’s tumor; and looks for any drugs that may disrupt them — not just cancer drugs but also medications approved for any condition and experimental therapies that have reached late-stage trials. Researchers pit the proposed treatment against a tumor sample in the lab or in a mouse. These N of 1 trials do not require patients to take the medications, but, at their oncologist’s discretion and based on drug availability, some patients do try the drugs recommended by the computer.

“We’ve tested 39 drugs so far, and we have seen a remarkable response rate. There have been 60% of objective responses in mouse transplants so far, including both regression and stable disease,” says Andrea Califano, who runs the laboratory. “This is really a proof-of-concept trial, and the concept is that you can test a system for picking treatments rather than testing a particular treatment.”
